This test case uses the fixture methods setUp() and tearDown() to create EntityManagerFactory and EntityManager instances using the Java SE bootstrap API and then closes them when the test completes. The test case also uses these methods to seed the database with test data and remove it when the test completes. The tearDown() method is guaranteed to be called even if a test fails because of an exception. As with any JPA application in the Java SE environment, a persistence.xml file must be on the classpath in order for the Persistence class to bootstrap an entity manager factory. The file must contain the JDBC connection properties to connect to the database, and if the managed classes are not already listed, class elements must also be added for each managed class. If the transaction type is not specified, it defaults to the correct transaction type for the environment; otherwise, it should be set to RESOURCE_LOCAL.

This example demonstrates the basic pattern for all integration tests that use an entity manager. The advantage of this kind of test over a unit test is that no effort is required to mock up persistence interfaces. Emulating the entity manager and query engine in order to test code that interacts directly with these interfaces suffers from diminishing returns as more and more effort is put into preparing a test environment instead of writing tests. In the worst case, incorrect test results occur because of bugs in the test harness, not in the application code. Given the ease with which JPA can be used outside the application server, this effort may be better spent establishing a simple database test environment and writing automated functional tests.

However, despite the opportunity that testing outside the application server presents, care must be taken to ensure that such testing actually adds value. Quite often, developers fall into the trap of writing tests that do little more than test vendor functionality as opposed to true application logic.

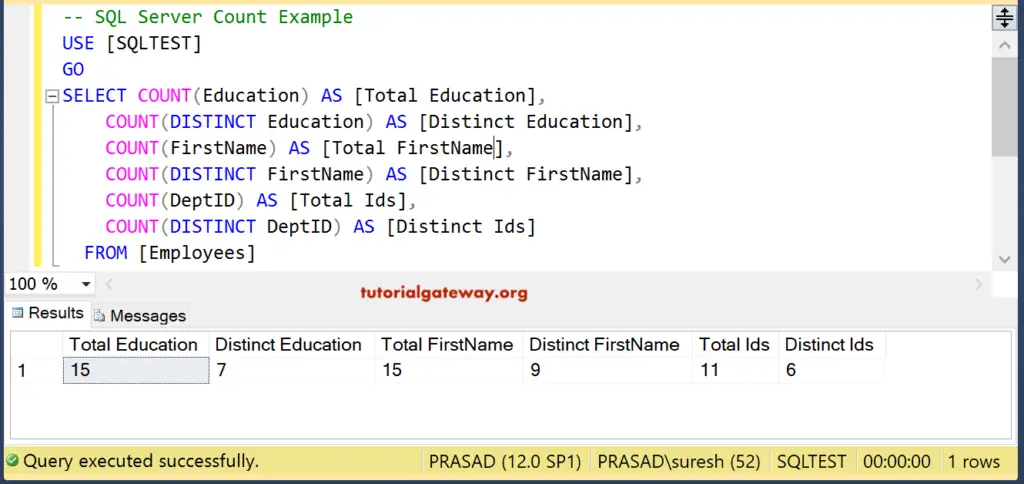

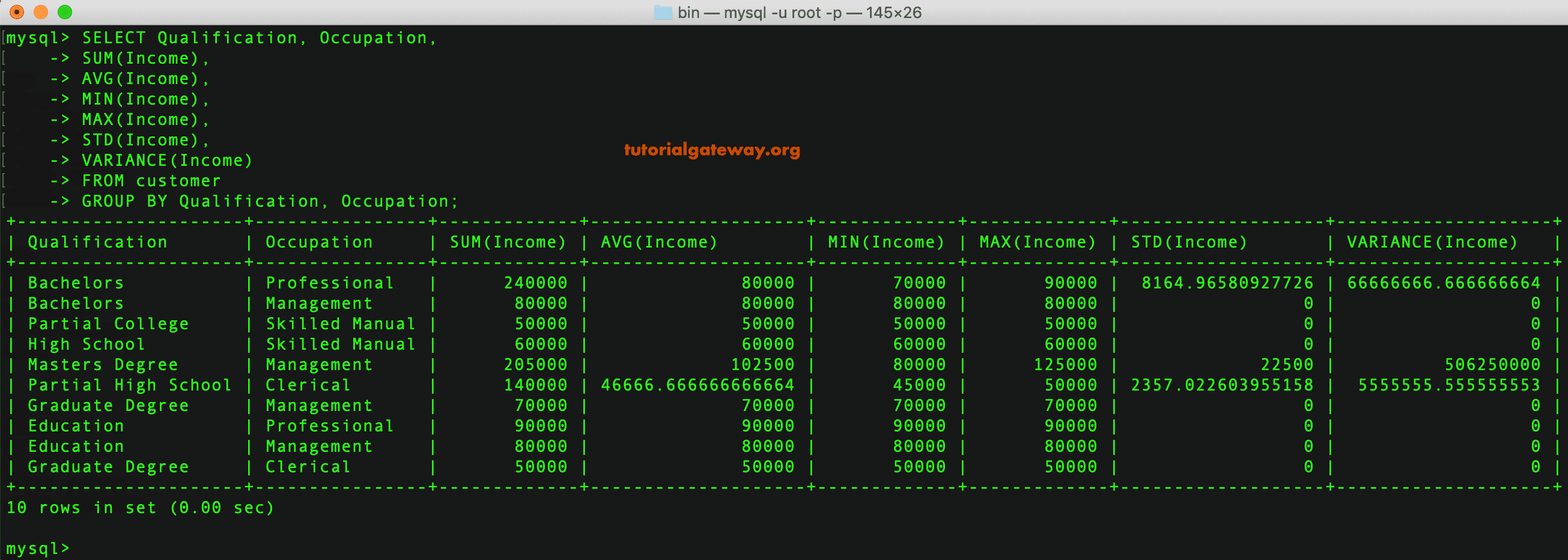

An example of this mistake is seeding a database, executing a query, and verifying that the desired results are returned. It sounds valid at first, but all that it tests is the developer's understanding of how to write a query. Unless there is a bug in the database or the persistence provider, the test will never fail.

Note that the query interfaces define the same set of methods and differ only in their return types. The simplest form of query execution is via the getResultList() method. If the query did not return any data, the collection is empty. The return type is specified as a List instead of a Collection in order to support queries that specify a sort order. If the query uses the ORDER BY clause to specify a sort order, the results are put into the result list in the same order. Listing 7-14 demonstrates how a query might be used to generate a menu for a command-line application that displays the name of each employee working on a project as well as the name of the department that the employee is assigned to.

Iterating over Sorted Results: `public void displayProjectEmployees`

The getSingleResult() method is provided as a convenience for queries that return only a single value. Instead of iterating to the first result in a collection, the object is directly returned.

It is important to note, however, that getSingleResult() behaves differently from getResultList() in how it handles unexpected results. Whereas getResultList() returns an empty collection when no results are available, getSingleResult() throws a NoResultException. Therefore, if there is a chance that the desired result may not be found, this exception must be handled. If multiple results are available after executing the query instead of the single expected result, getSingleResult() throws a NonUniqueResultException. Again, this can be problematic for application code if the query criteria may result in more than one row being returned in certain circumstances. Although getSingleResult() is convenient to use, be sure that the query and its possible results are well understood; otherwise, application code may have to deal with an unexpected runtime exception. Unlike other exceptions thrown by entity manager operations, these exceptions do not cause the provider to roll back the current transaction, if there is one.

Any SELECT query that returns data via the getResultList() and getSingleResult() methods may also specify locking constraints for the database rows impacted by the query. This facility is exposed through the query interfaces via the setLockMode() method. We will defer discussion of the locking semantics for queries until the full discussion of locking in Chapter 11.

Query and TypedQuery objects may be reused as often as needed so long as the same persistence context that was used to create the query is still active. For transaction-scoped entity managers, this limits the lifetime of the Query or TypedQuery object to the life of the transaction.
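One way to avoid handling NoResultException when a result may legitimately be absent is to execute the query with getResultList() and inspect the returned list. The following is a minimal sketch of that idea and is not part of the JPA API: the class SingleResults and the method singleOrEmpty are hypothetical names, and the helper takes a plain List so that it runs without a persistence provider. Using IllegalStateException for the multiple-result case is also an assumption made for illustration.

```java
import java.util.List;
import java.util.Optional;

// Hypothetical helper; not part of the JPA API.
final class SingleResults {
    private SingleResults() {
        // Utility class; no instances.
    }

    /**
     * Returns the only element of the list, or an empty Optional when the
     * list is empty. Throws if the list holds more than one element.
     */
    static <T> Optional<T> singleOrEmpty(List<T> results) {
        if (results.isEmpty()) {
            // Mirrors the "no result" case without raising an exception.
            return Optional.empty();
        }
        if (results.size() > 1) {
            // Assumed choice of exception for the "more than one result" case.
            throw new IllegalStateException(
                "Expected at most one result but found " + results.size());
        }
        // ofNullable tolerates a null element, which a query selecting
        // a nullable column could produce.
        return Optional.ofNullable(results.get(0));
    }
}
```

Given a query object named `query`, a call might look like `SingleResults.singleOrEmpty(query.getResultList())`, leaving the caller to decide what an absent result means.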

Other entity manager types may reuse Query and TypedQuery objects until the entity manager is closed or removed.

Lazy Field Loading: `@Entity public class Employee`

We are assuming in this example that applications will seldom access the comments in an employee record, so we mark it as being lazily fetched. Note that in this case the @Basic annotation is not only present for documentation purposes but also required in order to specify the fetch type for the field. Configuring the comments field to be fetched lazily allows an Employee instance returned from a query to have the comments field empty. The application does not have to do anything special to get it, however. By simply accessing the comments field, it will be transparently read and filled in by the provider if it was not already loaded.

Before you use this feature, you should be aware of a few pertinent points about lazy attribute fetching. First and foremost, the directive to lazily fetch an attribute is meant only to be a hint to the persistence provider to help the application achieve better performance. The provider is not required to respect the request because the behavior of the entity is not compromised if the provider goes ahead and loads the attribute. The converse is not true, though, because specifying that an attribute be eagerly fetched may be critical to being able to access the entity state once the entity is detached from the persistence context. We will discuss detachment more in Chapter 6 and explore the connection between lazy loading and detachment.

Second, on the surface it might appear that this is a good idea for certain attributes of an entity, but in practice it is almost never a good idea to lazily fetch simple types. There is little to be gained in returning only part of a database row unless you are certain that the state will not be accessed in the entity later on. The only times when lazy loading of a basic mapping should be considered are when there are many columns in a table or when the columns are large. It could take significant resources to load the data, and not loading it could save quite a lot of effort, time, and resources.

Unless either of these two cases is true, in the majority of cases lazily fetching a subset of object attributes will end up being more expensive than eagerly fetching them. Lazy fetching is quite relevant when it comes to relationship mappings, though, so we will be discussing this topic later in the chapter.

Entity Manager

In the "Entity Overview" section, it was stated that a specific API call needs to be invoked before an entity actually gets persisted to the database. In fact, separate API calls are needed to perform many of the operations on entities. This API is implemented by the entity manager and encapsulated almost entirely within a single interface called EntityManager. When all is said and done, it is to an entity manager that the real work of persistence is delegated. Until an entity manager is used to actually create, read, or write an entity, the entity is nothing more than a regular Java object.

When an entity manager obtains a reference to an entity, either by having it explicitly passed in as an argument to a method call or because it was read from the database, that object is said to be managed by the entity manager. The set of managed entity instances within an entity manager at any given time is called its persistence context. Only one Java instance with the same persistent identity may exist in a persistence context at any time. For example, if an Employee with a persistent identity of 158 exists in the persistence context, then no other Employee object with its id set to 158 may exist within that same persistence context.

Entity managers are configured to be able to persist or manage specific types of objects, read and write to a given database, and be implemented by a particular persistence provider.
It is the provider that supplies the backing implementation engine for the entire Java Persistence API, from the EntityManager through to implementation of the query classes and SQL generation.

All entity managers come from factories of type EntityManagerFactory. The configuration for an entity manager is templated from the EntityManagerFactory that created it, but it is defined separately as a persistence unit. A persistence unit dictates either implicitly or explicitly the settings and entity classes used by all entity managers obtained from the unique EntityManagerFactory instance bound to that persistence unit. There is, therefore, a one-to-one correspondence between a persistence unit and its concrete EntityManagerFactory. Persistence units are named to allow differentiation of one EntityManagerFactory from another.
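The uniqueness rule for a persistence context (one Java instance per persistent identity) can be pictured as a map keyed by identifier. The sketch below illustrates that rule only. It assumes a simplified Employee class and a hypothetical PersistenceContextSketch class, and it does not describe how any particular provider implements EntityManager.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

// Simplified stand-in for an entity class; a real entity would carry JPA mappings.
final class Employee {
    final int id;
    final String name;

    Employee(int id, String name) {
        this.id = id;
        this.name = name;
    }
}

// Hypothetical illustration of the "one instance per identity" rule.
final class PersistenceContextSketch {
    private final Map<Integer, Employee> managed = new HashMap<>();
    private final Function<Integer, Employee> loader;

    // The loader stands in for reading a row from the database.
    PersistenceContextSketch(Function<Integer, Employee> loader) {
        this.loader = loader;
    }

    // Returns the managed instance for this id, invoking the loader
    // only the first time the id is requested.
    Employee find(int id) {
        return managed.computeIfAbsent(id, loader);
    }

    // Number of instances currently tracked by this sketch.
    int managedCount() {
        return managed.size();
    }
}
```

Two lookups for id 158 through the same PersistenceContextSketch return the same object reference, which is the property the Employee example in the text relies on.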

Naming persistence units gives the application control over which configuration or persistence unit is to be used for operating on a particular entity.

Summary

Entity mapping requirements often go well beyond the simplistic mappings that map a field or a relationship to a named column. We illustrated how delimiting identifiers allows the inclusion of special characters and provides case-sensitivity when the target database requires it. We showed how embeddable objects can have state, element collections, further nested embeddables, and even relationships. We gave examples of reusing an embeddable object with relationships in it by overriding the relationship mappings within the embedding entity.

We revealed the two approaches for defining and using compound primary keys, and demonstrated using them in a way that is compatible with EJB 2.1 primary key classes. We established how other entities can have foreign key references to entities with compound identifiers and explained how multiple join columns can be used in any context where a single join column applies. We also showed some examples of mapping identifiers, called derived identifiers, which included a relationship as part of their identities.

We explained some advanced relationship features, such as read-only mappings and optionality, and showed how they could be of benefit to some models. We then went on to describe some of the more advanced mapping scenarios that included using join tables or sometimes avoiding the use of join tables. The topic of orphan removal was also touched upon and clarified. We went on to show how to distribute entity state across multiple tables and how to use the secondary tables with relationships. We even saw how an embedded object can map to a secondary table of an entity.

The ResultSet and RowSet interfaces are a concern because there is no logical equivalent to these structures in JPA. Results from JP QL and SQL queries executed through the Query interface are basic collections. Even though we can iterate over a collection, which is semantically similar to the row position operations of the JDBC API, each element in the collection is an object or an array of objects. There is no equivalent to the column index operations of the ResultSet interface.

Emulating the ResultSet interface over the top of a collection is unlikely to be a worthwhile venture. Although some operations could be mapped directly, there is no generic way to map the attributes of an entity to the column positions needed by the application code. There is also no guarantee of consistency in how the column positions are determined; they may differ between two queries that achieve the same goal but have ordered the SELECT clause differently. Even when column names are used, the application code is referring to the column aliases of the query, not necessarily the true column names.

In light of these issues, our goal in planning any migration from JDBC is to isolate the JDBC operations so that they can be replaced as a group, as opposed to accommodating business logic that depends on JDBC interfaces. Refactoring the existing application to break its dependencies on the JDBC interfaces is generally going to be the easiest path forward.

Regarding the SQL usage in a JDBC application, we want to caution that although JPA supports SQL queries, it is unlikely that this will be a major benefit for migrating an existing application. There are a number of reasons for this, but the first to consider is that most SQL queries in a JDBC application are unlikely to return results that map directly to the domain model of a JPA application. As you learned in Chapter 11, constructing an entity from a SQL query requires all the data and foreign key columns to be returned, regardless of what will eventually be required by the application code at that point in time.

If the vast majority of SQL queries need to be expanded to add columns necessary to satisfy the requirements of JPA, and if they then need to be mapped before they can be used, rewriting the queries in JP QL is probably a better investment of time. The syntax of a JP QL query is easier to read and construct, and it maps directly to the domain model you want to introduce to the application. The entities have already been mapped to the database, and the provider knows how to construct efficient queries to obtain the data you need. SQL queries have a role, but they should be the exception, not the rule.

There are many Java EE design patterns that can help in this exercise if the application has made use of them, or can be easily refactored to incorporate them. We will be exploring several of these in detail later in the chapter, but it is worth mentioning at least a few now in the context of JDBC applications specifically. The first and most important pattern to consider is the Data Access Object pattern. This cleanly isolates the JDBC operations for a specific use case behind a single interface that we can migrate forward. Next, consider the Transfer Object pattern as a way of introducing an abstraction of the row and column semantics of JDBC into a more natural object model. When an operation returns a collection of values, don't return the ResultSet to the client.
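The Data Access Object and Transfer Object patterns can be sketched together in plain Java. In this hedged example, EmployeeDao, EmployeeSummary, and InMemoryEmployeeDao are hypothetical names, and the in-memory rows stand in for the JDBC ResultSet that a real implementation would read. The point is only that callers see a List of transfer objects, so the implementation behind the interface could later be replaced by one based on JPA.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Transfer object: an immutable carrier for the values a caller needs.
final class EmployeeSummary {
    private final int id;
    private final String name;

    EmployeeSummary(int id, String name) {
        this.id = id;
        this.name = name;
    }

    int getId() {
        return id;
    }

    String getName() {
        return name;
    }
}

// Data access object: the only type the business logic depends on.
interface EmployeeDao {
    List<EmployeeSummary> findByDepartment(String departmentName);
}

// Stand-in implementation. Each row is {id, name, department}, playing the
// role of the rows a JDBC-backed implementation would read from a ResultSet.
final class InMemoryEmployeeDao implements EmployeeDao {
    private final List<String[]> rows;

    InMemoryEmployeeDao(List<String[]> rows) {
        this.rows = rows;
    }

    @Override
    public List<EmployeeSummary> findByDepartment(String departmentName) {
        List<EmployeeSummary> result = new ArrayList<>();
        for (String[] row : rows) {
            if (row[2].equals(departmentName)) {
                // Copy row and column data into a transfer object.
                result.add(new EmployeeSummary(Integer.parseInt(row[0]), row[1]));
            }
        }
        // Callers receive a read-only collection, never the underlying rows.
        return Collections.unmodifiableList(result);
    }
}
```

Because client code depends only on EmployeeDao and EmployeeSummary, neither JDBC nor JPA types leak across the boundary.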

Construct transfer objects and build a new collection similar to the results of the Query operations in JPA. These steps can go a long way toward creating boundary points where JPA can be introduced without having a major impact on the application logic.

Many-to-one

To create a many-to-one mapping for a field or property, the many-to-one element may be specified. This element corresponds to the @ManyToOne annotation and, like the basic mapping, has fetch, optional, and access attributes. Normally the target entity is known by the provider because the field or property is almost always of the target entity type, but if not, the target-entity attribute should also be specified. When the many-to-one foreign key contributes to the identifier of the entity and the @MapsId annotation described in Chapter 10 applies, the maps-id attribute would be used. The value, when required, is the name of the embeddable attribute of the embedded id class that maps the foreign key relationship. If, however, the relationship is part of the identifier but a simple @Id would be applied to the relationship field or property, the boolean id attribute of the many-to-one element should be specified and set to true.

A join-column element may be specified as a subelement of the many-to-one element when the column name is different from the default. If the association is to an entity with a compound primary key, multiple join-column elements will be required. Mapping an attribute using a many-to-one element causes the mapping annotations that may have been present on that attribute to be ignored.

All the mapping information for the relationship, including the join column information, must be specified or defaulted within the many-to-one XML element. Instead of a join column, it is possible to have a many-to-one or one-to-many relationship that uses a join table. It is for this case that a join-table element may be specified as a subelement of the many-to-one element. The join-table element corresponds to the @JoinTable annotation and contains a set of join-column elements that join to the owning entity, which is normally the many-to-one side. A second set of join columns joins the join table to the inverse side of the relationship. In the absence of one or both of these, the default values will be applied.

Unique to relationships is the ability to cascade operations across them. The cascade settings for a relationship dictate which operations are cascaded to the target entity of the many-to-one mapping. To specify how cascading should occur, a cascade element should be included as a subelement of the many-to-one element. Within the cascade element, we can include our choice of empty cascade-all, cascade-persist, cascade-merge, cascade-remove, cascade-refresh, or cascade-detach subelements that dictate that the given operations be cascaded. Of course, specifying cascade elements in addition to the cascade-all element is simply redundant.

Now we come to an exception to the rule we gave earlier, when we said that overriding of mappings will typically be for physical data overrides. When it comes to relationships, there are times when you will want to test the performance of a given operation and would like to be able to set certain relationships to load eagerly or lazily. You will not want to go through the code and keep changing these settings back and forth, however. It would be more practical to have the mappings that you are tuning in XML and just change them when required. The listing shows overriding two many-to-one relationships to be lazily loaded.

Instead of injecting an entity manager, we are injecting an entity manager factory. Prior to searching for the entity, we manually create a new application-managed entity manager using the factory. Because the container does not manage its lifecycle, we have to close it later when the bean is removed during the call to finished(). Like the container-managed extended persistence context, the Department entity remains managed after the call to init(). When addEmployee() is called, there is the extra step of calling joinTransaction() to notify the persistence context that it should synchronize itself with the current JTA transaction.

Without the joinTransaction() call, the changes to Department would not be flushed to the database when the transaction commits.

Because application-managed entity managers do not propagate, the only way to share managed entities with other components is to share the EntityManager instance. This can be achieved by passing the entity manager around as an argument to local methods or by storing the entity manager in a common place such as an HTTP session or singleton session bean. Listing 6-13 demonstrates a servlet creating an application-managed entity manager and using it to instantiate the EmployeeService class we defined in Chapter 2. In these cases, care must be taken to ensure that access to the entity manager is done in a thread-safe manner. While EntityManagerFactory instances are thread-safe, EntityManager instances are not. Also, application code must not call joinTransaction() on the same entity manager in multiple concurrent transactions.

With this change made, the department manager bean now works as expected. Extended entity managers create a persistence context when a stateful session bean instance is created, and that context lasts until the bean is removed. Because the Department entity is still managed by the same persistence context, whenever it is used in a transaction any changes will be automatically written to the database.